As Drupal is used more and more for large, high-traffic websites, it has become very important to focus on performance enhancements to Drupal and the underlying server infrastructure. In doing so, we are looking to increase the number of requests that a Drupal site can handle and to decrease the amount of time required to load a particular page, or the entire site in general. There are a number of ways to accomplish these performance goals, and while there may not be one "right way," there are some general best practices that should be considered when planning to deploy a large Drupal site -- or even after you've already launched a site and realize that the performance is not what you had hoped for.

Server Architecture

There are various ways to design a server architecture to ensure that your website will scale as needed in the future. Typically, it is best to separate servers by role, so that depending on the site's bottlenecks, we can scale certain portions of it independently of others. The roles can be separated generally along these lines:

- Web Server: httpd, nginx, etc. with PHP

- Database Server: MySQL, PostgreSQL

- Front-End Cache: Varnish, potentially a CDN

- Other Caches: Memcache (alternative caching mechanism for Drupal)

- Other External Applications: For example, a Solr server can be used to provide faceted search functionality in Drupal and offload search traffic from the SQL database.

Not all of these need to be on separate servers in all cases. Some smaller sites could get away with running all of these on a single server. For larger sites, I would generally start with two servers for a basic site: one for web services (with services like Varnish and Memcache running on the web server) and one for the database, so that the database can be precisely tuned and guaranteed resources.

Web Server

A web server needs to have enough RAM to support your expected concurrent traffic. Consider that it also may be running APC and Memcache and that it will be running both httpd and PHP processes, which could average about 100MB each or more depending on your site and the modules in use. An average web server would have around 8-16GB of RAM and 4 to 16 cores. (Or more, if you can afford it!)

For very busy sites, it's also important to configure the KeepAlive settings so that processes aren't remaining open in a waiting state too frequently (for too long or for too many clients). In some cases, it's best to disable KeepAlive completely or not expose Apache's KeepAlive functionality directly to clients -- for example, by using Varnish in front of Apache.

Apache httpd Configuration

Based on your available resources (mostly looking at RAM), httpd needs to be configured to not have more processes than could be supported with the available RAM on your server. If you have other services running on the server, those need to be taken into consideration as well. Generally this means reducing ServerLimit and MaxClients from the defaults in httpd.conf. Also, setting MaxRequestsPerChild to something around 2000-4000 will help ensure that httpd processes get cycled more often, helping to reduce memory usage and potential memory leaks within a process.
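
As a rough illustration of the sizing logic above, the following Python sketch estimates a process limit from available RAM. The function name, the 2GB reserved for other services, and the example server size are assumptions for illustration only; the 100MB per-process figure and the MaxRequestsPerChild value are taken from the ranges mentioned in this article. Measure your own process sizes before settling on real values.

```python
def max_clients(total_ram_mb, reserved_mb, per_process_mb):
    """Estimate how many httpd processes fit in RAM without swapping.

    reserved_mb covers everything else on the box (OS, APC, memcached,
    Varnish, ...). All inputs are estimates you must measure yourself.
    """
    if per_process_mb <= 0:
        raise ValueError("per_process_mb must be positive")
    available_mb = total_ram_mb - reserved_mb
    return max(available_mb // per_process_mb, 0)


# Hypothetical server: 8GB RAM, 2GB reserved, ~100MB per httpd/PHP process.
limit = max_clients(total_ram_mb=8192, reserved_mb=2048, per_process_mb=100)

# Print the directives discussed above as they might appear in httpd.conf.
print(f"ServerLimit {limit}")
print(f"MaxClients {limit}")
print("MaxRequestsPerChild 3000")
```

If the result is much lower than the traffic you expect, that is usually a sign to reduce per-process memory, move other services off the web server, or add web servers, rather than to raise the limit anyway.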

APC

APC is a PECL module for PHP which provides an opcode cache for PHP. This keeps pre-compiled PHP code cached so that the site's PHP code doesn't need to be compiled with each page load, which is a major performance gain. APC should be considered mandatory for every web server running a Drupal site; there is no good reason not to use it or some other opcode cache.

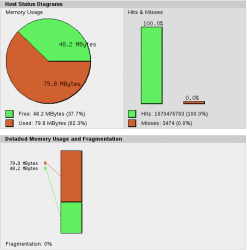

Once installed, you may need to increase the memory allocated by default. For most Drupal sites, you will probably need at least 64MB, potentially more, based on how many modules and other code you have on your site. There is an apc.php script included in the PECL module which, if copied to your webroot, will give you a graphical representation and APC stats/configuration information. NOTE: Do NOT leave apc.php in a publicly accessible location. Review the APC status periodically, and increase allocated memory if you notice it's low on space or has high fragmentation. You should be seeing a very high cache hit rate if APC is configured properly -- 99% or better after running for a while.
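
The periodic review described above can be reduced to two simple checks. The sketch below is a hypothetical helper, not part of APC: it takes hit, miss, and memory numbers that you would read off apc.php by hand, and the 10% free-memory threshold is an assumption chosen for illustration. Only the 99% hit-rate target comes from this article.

```python
def apc_warnings(hits, misses, mem_total_mb, mem_free_mb,
                 min_hit_rate=0.99, min_free_fraction=0.10):
    """Return a list of warnings based on numbers read from apc.php.

    min_free_fraction is an illustrative threshold, not an APC default.
    """
    warnings = []
    requests = hits + misses
    if requests > 0 and hits / requests < min_hit_rate:
        warnings.append("hit rate below target")
    if mem_total_mb > 0 and mem_free_mb / mem_total_mb < min_free_fraction:
        warnings.append("cache memory nearly full")
    return warnings


# Made-up example figures for a cache with 64MB allocated.
print(apc_warnings(hits=9950, misses=50, mem_total_mb=64, mem_free_mb=20))
print(apc_warnings(hits=900, misses=100, mem_total_mb=64, mem_free_mb=2))
```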

There are also some other configuration options that can help performance, but they are beyond the scope of this article. Review the APC section of the PHP website for more information on APC configuration settings.

Database Server

The server specifications required for a database server can vary greatly based upon your site's needs, though in general we would look for 4 to 16 cores and a minimum of 8GB of RAM. For performance and data durability, it's recommended to use the InnoDB MySQL back-end as much as possible. When using InnoDB, it's best to have enough RAM to fit all of your commonly used tables so that you can minimize disk access on the server; DB servers with 64GB or more are common for large sites.

- MySQL settings: We could fill an entire document with MySQL settings alone, but to keep this simple, these are the main configuration settings to look at:

  - default_storage_engine -- Should be innodb

  - innodb_buffer_pool_size -- As large as possible based on RAM size for the server, and accounting for other MySQL RAM needs and any other services that may be running. In general, this should be 60-80% of the DB server RAM size, giving MySQL ample RAM without sending the server into swap.

  - tmpdir -- In many cases it's a good idea to create a ramdisk on the server to be used as the MySQL tmpdir so that temp tables that MySQL needs to write to "disk" are actually using RAM instead. The size of this ramdisk will vary based on the data specific to the site, though in general something in the 1-4GB range is sufficient.

  - long_query_time -- Typically set to 1 (second) to log any query that takes longer than that to run. Slow queries are written to whatever file you define in the log_slow_queries setting (replaced by slow_query_log and slow_query_log_file in newer MySQL versions).

  - max_connections -- Setting this too high can allow too many concurrent queries from the web servers and could cause a downward spiral of performance on the DB server. Set this too low and web servers will be unable to connect if there are already a lot of connections. The number here depends on the number of web servers you have running for the site as well as how much the DB server hardware can support. Something in the 100-200 range is common.

  - innodb_flush_log_at_trx_commit -- InnoDB defaults to full "ACID" compliance. Most web sites do not need this, and flushing changes to disk after every transaction can be a huge performance hit. Setting this to 2 or 0, depending on the data durability requirements, can greatly improve performance.

- Slow log: Once the slow log is enabled as described above, MySQL will log any slow queries to it. The log can be analyzed with tools such as pt-query-digest from the Percona Toolkit (formerly Maatkit).

- mysqlreport: This tool gives insight into MySQL variables and performance since the server started. See http://tag1consulting.com/MySQL_Monitoring_and_Tuning for more information on using mysqlreport.
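
To make the MySQL settings above concrete, here is a small Python sketch that derives a buffer pool size from server RAM and prints a my.cnf fragment. The function names, the 70% default, the max_connections value of 150, and the /mnt/mysql-tmp ramdisk path are all assumptions picked from within the ranges given above; treat the output as a starting point to review, not a recommended configuration.

```python
def innodb_buffer_pool_mb(server_ram_mb, percent=70):
    """Return a buffer pool size in MB as a percentage of server RAM."""
    if not 60 <= percent <= 80:
        raise ValueError("percent is expected to be in the 60-80 range")
    return server_ram_mb * percent // 100


def my_cnf_fragment(server_ram_mb, max_connections=150,
                    flush_log_at_trx_commit=2, tmpdir="/mnt/mysql-tmp"):
    """Render the settings discussed above as a [mysqld] fragment.

    tmpdir is a hypothetical ramdisk mount point; create it yourself.
    """
    pool_mb = innodb_buffer_pool_mb(server_ram_mb)
    lines = [
        "[mysqld]",
        "default_storage_engine = innodb",
        f"innodb_buffer_pool_size = {pool_mb}M",
        f"tmpdir = {tmpdir}",
        "long_query_time = 1",
        f"max_connections = {max_connections}",
        f"innodb_flush_log_at_trx_commit = {flush_log_at_trx_commit}",
    ]
    return "\n".join(lines)


# Example: a dedicated database server with 64GB of RAM.
print(my_cnf_fragment(65536))
```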

Front-End Cache

A front-end cache in front of your web server can be very beneficial, especially when your site serves mostly anonymous traffic. It can also bring some small performance improvements for logged-in traffic, because it can cache images, JavaScript, and CSS, saving those requests from hitting httpd, which can be much more resource-intensive.

Varnish has a very flexible configuration such that it could be used in a number of different settings. For some sites it makes sense to only cache static content such as images, css, and javascript. For others, it gets more involved and provides a cache for full page content. In those cases, it is very important to use Pressflow if you are using a Drupal 6 site, or use Drupal 7+. Drupal 6 has some design limitations that make it impossible to do good caching using Varnish or similar; these are improved in Pressflow and in newer Drupal versions.

Memory requirements for Varnish will vary based on the amount of traffic and how much data is being cached. If you are caching pages for logged-in users, the cache size will grow extremely fast because you need to store one copy of each page for each individual user. For this reason and others, when Varnish is doing page caching, it is typically limited to anonymous traffic, and requests from logged-in users are passed on without caching.
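
The anonymous-only policy described above boils down to a small decision per request. A real deployment would express this in Varnish's own configuration language (VCL); the Python sketch below only models the logic. It assumes that logged-in users can be recognized by a Drupal session cookie whose name starts with SESS (or SSESS over HTTPS), and the function names and the "lookup"/"pass" return labels are illustrative.

```python
def has_drupal_session(cookie_header):
    """Return True if the Cookie header carries a Drupal session cookie.

    Assumes session cookie names start with SESS or SSESS.
    """
    for part in cookie_header.split(";"):
        name = part.strip().split("=", 1)[0]
        if name.startswith(("SESS", "SSESS")):
            return True
    return False


def cache_decision(method, cookie_header=""):
    """Decide whether a request may be served from the page cache."""
    if method not in ("GET", "HEAD"):
        return "pass"      # never cache form submissions and the like
    if has_drupal_session(cookie_header):
        return "pass"      # logged-in user: go straight to the web server
    return "lookup"        # anonymous: try the cache first


print(cache_decision("GET", "has_js=1"))
print(cache_decision("GET", "has_js=1; SESSabc123=xyz"))
print(cache_decision("POST"))
```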

Another possibility for a front-end cache is to use a CDN (Content Delivery Network) service. A CDN will cache files for you, similar to what we aim to accomplish with Varnish, but the files are cached on the CDN's servers. This offloads traffic from your own servers, and because CDN servers are geographically distributed, they are potentially on a closer and faster network for site visitors.

Profiling Code

There are a number of methods and tools for profiling code and front-end performance. Each of these serves a slightly different purpose, but together they provide a good overview of areas that are in need of performance improvements.

- xhprof -- xhprof is a PHP profiler that has a simple HTML interface. When enabled in Drupal (via the xhprof module), xhprof profiling data will be available for each page load. This shows which functions are called and how many times, as well as CPU, memory, and run-time information for each.

- yslow -- yslow is a tool provided by Yahoo! to identify problem areas in a web page. It analyses things like the number of HTTP requests, cookies, and CSS/JS compression and aggregation, and provides an overall grade for the site with specific recommendations for the problems it finds.

- Firebug/Chrome developer tools -- Both Firefox and Chrome browsers provide tools for analysing web page elements and load times, and for getting some insight into which parts of a page load are taking a long time. The Firefox plugins 'Firebug' and 'Live HTTP Headers' can be especially useful.

- NewRelic -- NewRelic is a paid service which provides performance monitoring for all sorts of applications including PHP, Java (Solr), and MySQL performance. This allows collection of historic performance data, as well as digging into performance data to see which pages are slow, and even narrowing that down to specific function/database calls within a particular page.

Alternate Drupal Cache - Memcache

Memcached is a key/value store that can be used to alleviate database load by storing Drupal cache (and even session) data. The Drupal memcache module gives the option to store particular caches in memcache or in the database so that it can be customized to the needs of different sites. The benefit of memcache is two-fold in most situations: not only can you access cache data faster in memcached than in MySQL, but fewer queries are run on the database, allowing other queries to run with higher performance due to less overall DB load.

Memcached can be run directly on the web server, spread out as part of a cluster on multiple web servers, or run on its own dedicated servers. Generally this would be set up as a cluster over all of the web servers used for a site. In that situation, each web server utilizes memcached over all of the web servers, not just locally -- this way the data is being shared among all the servers, providing better caching performance and continuity, in case a user hits a different server in a subsequent page request.
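
The reason a shared cluster works is that every web server uses the same server list and the same rule for mapping a cache key to a memcached instance, so they all agree on where a given item lives. The sketch below illustrates that idea with a simple hash-and-modulo rule. This is a simplification for illustration only: actual memcache clients use their own distribution strategies, and the host names and cache key here are hypothetical.

```python
import hashlib

# Hypothetical cluster: one memcached instance per web server,
# listening on memcached's default port.
SERVERS = ["web1:11211", "web2:11211", "web3:11211"]


def server_for_key(key, servers):
    """Map a cache key to one server using a stable hash.

    Any web server running this with the same list picks the same
    instance for the same key, so cached data is effectively shared.
    """
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    return servers[int(digest, 16) % len(servers)]


# The same key resolves to the same instance no matter who asks.
print(server_for_key("cache_page:node/1", SERVERS))
```

One drawback of plain modulo hashing is that adding or removing a server remaps most keys, which is one reason real clients often offer other strategies.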

There are other considerations for setting up memcached, such as the number of bins you want to run (allowing you to separate out different caches into different memcache bins), and how much space to allocate to each bin. Be careful to watch for evictions in a memcache bin, as this is an indication that your bin size is too small and can lead to very bad performance.
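
One way to watch for evictions is to read the counters that memcached reports in response to its stats command, which come back as lines of the form "STAT name value". The following sketch parses such output and extracts the evictions counter. The sample text and its numbers are made up for illustration, and the helper names are hypothetical; fetching the stats from a live server is left out.

```python
# Illustrative sample of "stats" output; the values are invented.
SAMPLE_STATS = """\
STAT curr_items 15230
STAT get_hits 982113
STAT get_misses 10421
STAT evictions 42
END
"""


def parse_stats(text):
    """Turn 'STAT name value' lines into a dict of strings."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 3 and parts[0] == "STAT":
            stats[parts[1]] = parts[2]
    return stats


def evictions(text):
    """Return the evictions counter, or 0 if it is not reported."""
    return int(parse_stats(text).get("evictions", 0))


count = evictions(SAMPLE_STATS)
if count > 0:
    print(f"{count} evictions: consider giving this bin more memory")
```

Since the counter is cumulative from the time the instance started, comparing two readings taken some time apart tells you whether evictions are still happening.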

Pressflow

Pressflow is a modified version of Drupal that includes many changes aimed specifically at performance, in particular exclusive MySQL 5 support, path alias caching, reverse proxy support, and lazy session initialization. This is especially useful for Drupal 6, since Drupal 7 includes many performance improvements that are not available in D6; Pressflow has backported many of these patches and includes other improvements as well. Tag1 Consulting maintains a custom branch of Pressflow 6 which includes even more improvements that aren't included in the official Pressflow 6 branch. Additional patches in the Tag1 fork include a module_implements patch, which can greatly improve performance on sites where many modules are in use, as well as fixes for the theme registry and schema cache.

Drupal Configuration

There are a number of factors that contribute to the performance of a Drupal site. Above, we outlined ways to profile the code in order to track down areas of poor performance. There are also some general Drupal configurations that can help improve performance. Here are a few Drupal settings and best practices which can have a large impact on the performance of a site. Nathaniel Catchpole mentions some of these issues in his article, "Performance and Scalability in Drupal 7," which was published in Issue 1 of Drupal Watchdog.

Limit the Number of Modules

It may not be obvious to everyone, but as you increase the number of modules used on a Drupal site, you are eventually going to notice a drop in performance. This is caused by a number of factors, and there are ways to minimize the impact that a large number of modules will have on a site (the Tag1 Pressflow fork includes a number of patches to address these issues), but the best practice is to limit the number of modules as much as possible. A general rule of thumb is to try to stay below 100 modules, but that number is not a hard limit.

Views

The Drupal Views module offers a very convenient way to dynamically display content on your site. The downside is that the automated queries generated by Views are typically not well optimized, and can quickly bring a database server to its knees on a busy site or one with a lot of content. Many times we'll see views queries doing many joins between large tables -- sometimes this can be avoided by changing your view slightly, if that is an option; in other cases it may be possible to write a more optimized query in a custom module and use that instead.

For debugging purposes, there is an option in the Views module settings which enables adding the view name to the query so that it will show up in the MySQL slow log. This helps to easily tie a slow query to a particular view.

If your view is cacheable, you should definitely cache it by enabling the cache option for that particular view. Another module that provides a way to cache Views content is Views Content Cache. It takes Views caching even further, providing a highly customizable set of options for updating the cache based on things like new comments, new content of particular content types, etc.

In general, caching a view can greatly help performance; however, there are certain views for which you will not see any noticeable difference in performance with caching enabled. This is notably the case when you have a view which has a wide range of input values (arguments), e.g. node IDs. The cached views results will populate your cache, but the hit rate isn't going to be great because you end up with a different cached view for each different argument used.
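
The effect of arguments on the hit rate can be seen with a toy model in which each distinct (view, argument) pair gets its own cache entry. The sketch below ignores cache expiration and uses made-up view names and request patterns; it is meant only to show why a view with many distinct arguments benefits much less from caching.

```python
def cache_hit_rate(requests):
    """Simulate a cache keyed by (view name, argument).

    The first request for a key is a miss that populates the cache;
    every later request for the same key is a hit.
    """
    cached = set()
    hits = 0
    for key in requests:
        if key in cached:
            hits += 1
        else:
            cached.add(key)
    return hits / len(requests) if requests else 0.0


# A view with no arguments, requested 1000 times.
front_page = [("frontpage", None)] * 1000

# A view taking a node ID: 1000 requests spread over 900 distinct nodes.
per_node = [("related", nid) for nid in range(900)]
per_node += [("related", nid) for nid in range(100)]

print(cache_hit_rate(front_page))
print(cache_hit_rate(per_node))
```

In this model the argument-free view is served from cache almost every time, while the per-node view hits the cache for only a tenth of its requests and fills the cache with 900 entries along the way.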

Drupal Performance Settings

The Drupal page cache is great for a small website that's mostly static, i.e. where content doesn't change very often. However, if your site is more dynamic, and especially if it has a lot of pages, it is less useful. Still, as with the Views cache, it will help level out load spikes for you.

The Drupal core CSS and Javascript aggregation is very helpful but its technique can be improved. The advagg module provides an alternate method for handling JS and CSS. It makes sure that your basic CSS and JS isn't downloaded for each page that a user requests by wrapping it up as a separate bundle and reusing the bundle for each page. This strategy will lower your bandwidth usage and reduce the time the user's browser takes to render the page.

Comments

I wrote a blog post about how I handle it on my personal site:

http://www.helloper.com/better-front-end-performance-in-drupal

Curious to know when this article was written. Much of the documentation I find on this topic is outdated (even one piece written by Dries); this article seems pretty recent.

This was originally written for Drupal Watchdog Vol. 2, Issue 1, which was published in March 2012.

It is also possible to improve a Drupal website's performance by changing your host and stack. I have hosted my website with Cloudways on Google Cloud. The Cloudways platform (https://www.cloudways.com/en/drupal-cloud-hosting.php) uses Apache, Nginx, Memcached, Varnish and PHP-FPM as a stack. These packages have really improved the performance of my website. Apart from this, I have also enabled Cloudflare CDN and HTTP/2, which has further improved the performance.