There are only two hard things in Computer Science: cache invalidation, naming things and off-by-one errors.

A common practice for scaling Drupal sites is to use Varnish and ESI. In this scenario, if the cache is warm, Varnish reads the pieces of the page from the cache, puts them together and sends the result back to the client. For example, if the rendered HTML for a node is stored separately, we need to render it only once and it can be reused on all pages where that node appears (with the same display mode, for example 'teaser').

If the cache needs regeneration, Varnish has to issue a request to Drupal for every piece of the page, which can mean a lot of requests: we recently worked on a high-traffic client website that had 120 subrequests on the homepage. Worse, Varnish executes subrequests serially, one after the other.

As you can imagine, 120 serial subrequests can take a while; if stale data is available, you might want to enable "grace mode" and temporarily serve it while updated data is generated. Another feared problem is "runaway page generation", where a bug in your app churns out bogus URLs and a bot then crawls them, causing the cache to fill up and start dropping valid entries.

When we needed to architect a new website for our client, we looked for an alternative solution. The idea was to turn "Varnish pulls content out of Drupal and puts it together via ESI" into "Drupal pushes content into a distributed key-value store and nginx puts it together". We needed a database that could replicate and enforce a quota on writes (to solve the runaway generation problem), simply erroring out if we tried to write more than that. The worst that can happen in that case is that we fail to regenerate important data and serve stale cache entries, but at least the site stays up.
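The write-quota idea can be illustrated with a minimal in-memory sketch. It assumes the quota counts writes per time window (the post does not say whether the quota is per write or per byte), and `QuotaStore`, `QuotaExceeded` and the key names are hypothetical stand-ins, not part of Redis or Drupal.

```python
class QuotaExceeded(Exception):
    """Raised when a write would exceed the per-window write quota."""


class QuotaStore:
    """In-memory stand-in for a key-value store with a write quota (hypothetical)."""

    def __init__(self, max_writes_per_window):
        self.max_writes = max_writes_per_window
        self.writes_in_window = 0
        self.data = {}

    def reset_window(self):
        # Would be called at the start of each quota window, e.g. once a minute.
        self.writes_in_window = 0

    def set(self, key, value):
        if self.writes_in_window >= self.max_writes:
            # Refuse the write; whatever is already stored stays readable.
            raise QuotaExceeded(key)
        self.data[key] = value
        self.writes_in_window += 1

    def get(self, key):
        return self.data.get(key)


store = QuotaStore(max_writes_per_window=2)
store.set("node:12:teaser", "<div>old teaser</div>")
store.set("node:13:teaser", "<div>another teaser</div>")

try:
    # A third write in the same window is rejected.
    store.set("node:12:teaser", "<div>new teaser</div>")
    write_rejected = True if False else False
except QuotaExceeded:
    write_rejected = True

# Readers still get the previous (stale) value, so the site stays up.
stale = store.get("node:12:teaser")
```

The point of the design is that a runaway generator can only hurt freshness, never availability: rejected writes leave the existing entries untouched.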

The hard problem to solve is when and what to regenerate. With a cache solution, you can set up a "time to live" or you could even force it to regenerate everything; most likely you don't want to do that. For the example site, this is fairly easy because there are precise events that define when things can change: a node gets written, a user buys something, a user logs in, and time itself. For the time-based events, we are running a drush 'tick' command which queries the database to see whether there are new licenses available or old ones expiring and queues pieces of content to be regenerated (nodes, views, pages etc).
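The time-based part can be sketched roughly as follows. This is only an illustration of the idea behind the 'tick' command, not the actual drush code: the `licenses` records, their field names and the integer timestamps are all assumptions made for the example.

```python
from collections import deque


def tick(licenses, last_tick, now):
    """Return content ids whose license started or expired in (last_tick, now]."""
    to_regenerate = []
    for lic in licenses:
        started = last_tick < lic["starts"] <= now
        expired = last_tick < lic["expires"] <= now
        if started or expired:
            to_regenerate.append(lic["content_id"])
    return to_regenerate


# Hypothetical license rows, as the tick query might return them.
licenses = [
    {"content_id": "node/12", "starts": 100, "expires": 500},
    {"content_id": "node/13", "starts": 50, "expires": 130},
    {"content_id": "node/14", "starts": 10, "expires": 900},
]

# Queue of content pieces waiting to be re-rendered and pushed to the store.
queue = deque()
queue.extend(tick(licenses, last_tick=90, now=150))
```

Only content whose state actually changed since the previous tick is queued, which is what keeps regeneration cheap compared with a blanket "time to live".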

Getting back to the actual details of the infrastructure, we needed a distributed, fast key-value store. It quickly became apparent that Redis was the best match for us. It's a key-value store where the values can be data structures. It also can do data replication—although primitively; every time the link is re-established, it retrieves the whole dataset from the master. However, with a small dataset combined with the fact that it serves stale data while re-syncing, this can be a viable solution.

Next, we looked for ways to interface Redis to nginx. There are third party nginx modules to do this interfacing, but we had a few problems with those for various reasons. Also, we were not quite sure how stable all the necessary third party modules (one of the Redis modules, then perhaps echo/eval or lua) would be. Aggravating the stability concerns is the fact that nginx is changing the module APIs even in stable releases.

Luckily enough, there is a small REST front-end for Redis called Webdis. Much like nginx itself, it follows an evented model and is very fast. The whole of Webdis clocks in at about 14,000 lines of C code, and half of that comes from third party libraries (hiredis, jansson, http-parser). Jeremy Zawodny praises it and says he is using it because Webdis can format Redis replies as JSON, which we don't use yet but may in the future. All this gives us a whole lot more confidence in the stability department than a third party nginx module (worse, several of them).

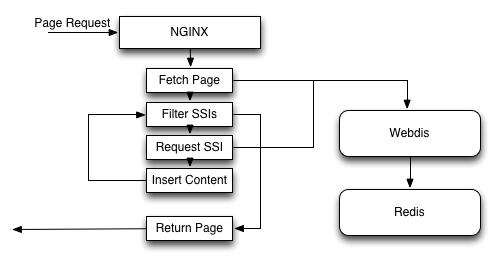

Now we can use the SSI capabilities of nginx for the "stitch together" logic. The process, shown in the diagram above, is: a request comes in; nginx issues a request to Webdis, which reads the pieces out of Redis and serves them back to nginx; SSI processing happens recursively until we arrive at the final page.

The necessary nginx configuration is not too complicated and we do not expect it to change much. To make things a lot faster, we are using the "structures" capabilities of Redis by not asking for one rendered node out of Redis in one subrequest, but as many as possible -- so if a view shows 10 nodes then we can handle that with a single request. This dropped the aforementioned 120 subrequests to 14. Also, nginx processes SSI subrequests in parallel.
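The batching idea can be sketched as below. It assumes the rendered pieces live in Redis hashes and that a multi-key read in the style of the Redis `HMGET` command is used; the post does not show the exact mechanism, so `group_by_hash`, the in-memory `hmget` stand-in and the sample data are hypothetical.

```python
from collections import defaultdict


def group_by_hash(wanted):
    """Group (hash, key) pairs so each hash needs only one multi-key read."""
    grouped = defaultdict(list)
    for hash_name, key in wanted:
        grouped[hash_name].append(key)
    return dict(grouped)


def hmget(store, hash_name, keys):
    """Mimic Redis HMGET: one call returns the values for many keys of one hash."""
    return [store[hash_name].get(key) for key in keys]


# In-memory stand-in for the Redis dataset: store[hash][key] -> rendered HTML.
store = {
    "node": {
        "12:teaser": "<article>Node 12</article>",
        "13:teaser": "<article>Node 13</article>",
        "14:teaser": "<article>Node 14</article>",
    }
}

# A view listing three teasers needs three pieces...
wanted = [("node", "12:teaser"), ("node", "13:teaser"), ("node", "14:teaser")]

# ...but only one batched read, because they all live in the same hash.
batches = group_by_hash(wanted)
fragments = hmget(store, "node", batches["node"])
```

The number of subrequests then scales with the number of distinct hashes on a page rather than with the number of rendered items.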

As for the Drupal-Redis integration, Drupal 7 is a dream to work with, as usual, this time because developers do not need to be aware (much) of the whole Redis front-end business. The Drupal 7 render system has an ESI/SSI tie-in (which I initiated, although the solution is actually Damien Tournoud's excellent work), so post-render we can write any piece into Redis and replace it with an SSI include command like `<!--# include virtual="/redis/node/12:teaser" -->`. Here "node" is the name of the hash we use and "12:teaser" is the key in that hash (in this case, you can think of the whole Redis database as a two-dimensional array).
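To make the "two-dimensional array" picture and the recursive stitching concrete, here is a small simulation. It is not how nginx implements SSI; it only mimics the effect, with a nested dict standing in for Redis. The "page" and "view" hashes and their keys are made up for the example; only the `/redis/<hash>/<key>` include format comes from the text above.

```python
import re

# Matches <!--# include virtual="/redis/<hash>/<key>" -->
INCLUDE = re.compile(r'<!--#\s*include\s+virtual="/redis/([^/"]+)/([^"]+)"\s*-->')


def render(html, store, depth=0, max_depth=10):
    """Expand SSI includes recursively using store[hash][key] lookups."""
    if depth > max_depth:
        raise RecursionError("SSI includes nested too deeply")

    def expand(match):
        hash_name, key = match.group(1), match.group(2)
        # Missing pieces expand to an empty string in this sketch.
        fragment = store.get(hash_name, {}).get(key, "")
        # A fragment may itself contain includes, so recurse.
        return render(fragment, store, depth + 1, max_depth)

    return INCLUDE.sub(expand, html)


store = {
    "page": {
        "front": '<main><!--# include virtual="/redis/view/frontpage:default" --></main>',
    },
    "view": {
        "frontpage:default": (
            '<!--# include virtual="/redis/node/12:teaser" -->'
            '<!--# include virtual="/redis/node/13:teaser" -->'
        ),
    },
    "node": {
        "12:teaser": "<article>Node 12</article>",
        "13:teaser": "<article>Node 13</article>",
    },
}

html = render('<!--# include virtual="/redis/page/front" -->', store)
```

Because each rendered node is stored once under its own key, changing node 12 means rewriting a single entry; every page or view that includes it picks up the new HTML on the next request.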

There is one piece left: customizations for logged in users. On login, we generate a JSON document and store it in Redis using the session_id as the key. On subsequent requests, we take the value of the session cookie and try to read the value out of Redis; if that succeeds, the user is logged in. Similarly, one could generate blocks based on the session key.
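The login check can be sketched in a few lines. A plain dict stands in for Redis here, and the cookie name `SESS`, the function names and the account fields are hypothetical; the real cookie name and JSON contents depend on the site.

```python
import json


def on_login(store, session_id, account):
    """Store one JSON document per session, keyed by the session id."""
    store[session_id] = json.dumps(account)


def current_user(store, cookies, cookie_name="SESS"):
    """Return the stored account data, or None for anonymous visitors."""
    session_id = cookies.get(cookie_name)
    if session_id is None:
        return None
    raw = store.get(session_id)
    return json.loads(raw) if raw is not None else None


store = {}
on_login(store, "abc123", {"uid": 7, "name": "alice"})

logged_in = current_user(store, {"SESS": "abc123"})
anonymous = current_user(store, {"SESS": "unknown-session"})
```

A successful read doubles as the authentication check, so the front-end never has to ask Drupal whether a session is valid.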

The system as outlined has one performance inefficiency. We are using SSI and nginx, but we aren't taking advantage of the fact that many of these SSI subrequests are repeated often. We can easily fix this by adding a proxy_cache directive in a few key places in nginx. On my laptop, Webdis tops out at 8,000-10,000 requests per second. With 14 subrequests per page, this gives a front-end performance of roughly 500-700 pages per second. Being dissatisfied with a mere few million requests an hour on a laptop, we can add the proxy_cache directive with a 10-second cache in front of Webdis. In addition, we can add a proxy_cache directive in front of the entire configuration and serve well above 10,000 pages per second on modest hardware (assuming a high hit rate).
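The uncached figures follow from simple division, spelled out here as a back-of-the-envelope check. It assumes every page costs exactly 14 Webdis requests and ignores all other overhead.

```python
SUBREQUESTS_PER_PAGE = 14
SECONDS_PER_HOUR = 3600


def pages_per_second(webdis_requests_per_second, subrequests=SUBREQUESTS_PER_PAGE):
    """Pages served per second if every page costs `subrequests` Webdis requests."""
    return webdis_requests_per_second / subrequests


# Webdis throughput range quoted in the text (requests per second).
low = pages_per_second(8_000)
high = pages_per_second(10_000)

# The same range expressed as pages per hour.
pages_per_hour_low = low * SECONDS_PER_HOUR
pages_per_hour_high = high * SECONDS_PER_HOUR

print(f"{low:.0f}-{high:.0f} pages/s")
print(f"{pages_per_hour_low:,.0f}-{pages_per_hour_high:,.0f} pages/hour")
```

This works out to a little over two million pages an hour without any caching in front of Webdis.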

At the end of the day, this means that if our website is one with relatively little content but an enormous amount of traffic we could handle that—only considering scalability, not high availability—with a single Drupal machine generating content into a Redis master and a single front-end with nginx, Webdis, and a Redis slave on it.

Comments

This seems like a very interesting idea? Could you do a BOF about it at DrupalCon Munich?

I'm interested to learn more about it!